high-tech devices that should make our lives more agreeable, safe and pleasant. Do they succeed? In his book "The Psychology of Everyday Things", Donald Norman, a leading cognitive psychologist, warns us about the technology paradox: technological innovation risks making our lives more complex every day. The intention of this warning is to make us aware of the importance of 'human-centred design', particularly where human-computer interaction is concerned.

The aim of Natural Human-Computer Interaction (NHCI) research is to create new interactive frameworks that integrate human language and behaviour into tech applications, focusing on the way we live, work, play and interact with each other. Such frameworks must be easy to use, intuitive, entertaining and non-intrusive. They must support interaction with computerized systems without the need for special external input-output equipment such as mice, keyboards, remote controls or data gloves. Such devices are replaced by hand gestures, speech, context awareness and body movement.

An interesting challenge for NHCI is to make such systems self-explanatory by working on their 'affordance' and introducing simple and intuitive interaction languages. Ultimately, according to M. Cohen, "Instead of making computer interfaces for people, it is of more fundamental value to make people interfaces for computers".

Our research team at the Media Integration and Communication Center (MICC) is working on natural interactive systems that exploit Computer Vision (CV) techniques. The main advantage of using visual input in this context is that it allows users to communicate with computerized equipment at a distance, without any physical contact with the equipment being controlled. Compared to speech commands, hand gestures are advantageous in noisy environments, in situations where speech commands would be disturbed, and for communicating quantitative information and spatial relationships.

We have developed a framework called VIDIFACE to capture hand gestures and to analyse smart objects (detection and tracking) in order to determine their position and movement in the interactive area. VIDIFACE uses a monochrome camera, equipped with a near-infrared band-pass filter, that captures the interaction scene at thirty frames per second. Because it works with infrared light, the vision system is less sensitive to visible light and is therefore robust enough to be used in public spaces. A chain of image-processing operations, optimized for real-time execution, removes noise, adapts to the background and finds feature dimensions.
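
The article names the stages of the VIDIFACE chain but not how they are implemented. The sketch below is a minimal, hypothetical illustration of such a chain, assuming each infrared frame arrives as rows of grey levels. It substitutes three textbook techniques (a 3x3 box blur for noise removal, a selectively updated running-average background model, and thresholded background differencing that yields the centroid and area of the foreground); the function names and the `threshold` and `alpha` values are illustrative assumptions, not VIDIFACE's own code.

```python
from typing import List, Optional, Tuple

Frame = List[List[float]]  # one monochrome IR frame as rows of grey levels (0-255)
Mask = List[List[bool]]


def denoise(frame: Frame) -> Frame:
    """3x3 box blur: each pixel is averaged with its neighbours inside the image."""
    h, w = len(frame), len(frame[0])
    out: Frame = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, count = 0.0, 0
            for yy in range(max(0, y - 1), min(h, y + 2)):
                for xx in range(max(0, x - 1), min(w, x + 2)):
                    total += frame[yy][xx]
                    count += 1
            out[y][x] = total / count
    return out


def foreground_mask(background: Frame, frame: Frame, threshold: float = 30.0) -> Mask:
    """Mark pixels that differ from the background model by more than `threshold` (assumed value)."""
    return [[abs(f - b) > threshold for b, f in zip(brow, frow)]
            for brow, frow in zip(background, frame)]


def update_background(background: Frame, frame: Frame, mask: Mask,
                      alpha: float = 0.05) -> Frame:
    """Running average applied only where no foreground was found, so a hand
    held still is not absorbed into the model (alpha is an assumed value)."""
    return [[b if m else (1 - alpha) * b + alpha * f
             for b, f, m in zip(brow, frow, mrow)]
            for brow, frow, mrow in zip(background, frame, mask)]


def blob_features(mask: Mask) -> Optional[Tuple[float, float, int]]:
    """Centroid (x, y) and area in pixels of all foreground pixels, or None."""
    sx = sy = n = 0
    for y, row in enumerate(mask):
        for x, on in enumerate(row):
            if on:
                sx += x
                sy += y
                n += 1
    if n == 0:
        return None
    return sx / n, sy / n, n


def process_frame(background: Frame, frame: Frame):
    """One pass of the hypothetical chain: denoise, segment, measure, adapt."""
    clean = denoise(frame)
    mask = foreground_mask(background, clean)
    features = blob_features(mask)
    return features, update_background(background, clean, mask)
```

Updating the background only where no foreground is detected is one common design choice: a motionless hand is not gradually learned as background, at the cost of slower adaptation in areas users touch often. At thirty frames per second, each pass of such a chain has roughly 33 ms to complete.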

What Kind of Interfaces?

Our early results concerned vertical screens showing multimedia content. Users point at a content display in order to access information: one significant installation is the PointAt system at the Palazzo Medici Riccardi museum in Florence. It is considered a pioneering experiment in museum didactics, and has been functioning successfully since 2004. With the PointAt system, users can view a replica of Benozzo Gozzoli's fresco 'Cavalcata dei Magi' in the palace chapel, and request information on the displayed figures simply by pointing at them. The information is delivered to the user as audio.
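
How PointAt links a pointing position to a figure is not described here. One plausible approach, sketched below under explicit assumptions, is a hit test against rectangles drawn around each figure on the projected replica, combined with a dwell time so that audio starts only after the user has pointed at the same figure for several consecutive frames. The `Region` and `DwellSelector` names, the normalized coordinates, the figure names and the 15-frame default (0.5 s at thirty frames per second) are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Region:
    """Hypothetical rectangle around one figure of the replica, linked to an
    audio clip. Coordinates are assumed normalized to [0, 1] screen space."""
    name: str
    audio_clip: str
    x0: float
    y0: float
    x1: float
    y1: float

    def contains(self, x: float, y: float) -> bool:
        return self.x0 <= x <= self.x1 and self.y0 <= y <= self.y1


class DwellSelector:
    """Reports a region once the pointing position has stayed inside it for
    `required_frames` consecutive frames (15 frames = 0.5 s at 30 fps)."""

    def __init__(self, regions: List[Region], required_frames: int = 15):
        self.regions = regions
        self.required_frames = required_frames
        self._current: Optional[Region] = None
        self._count = 0

    def hit_test(self, x: float, y: float) -> Optional[Region]:
        for region in self.regions:
            if region.contains(x, y):
                return region
        return None

    def update(self, x: float, y: float) -> Optional[Region]:
        """Feed one pointing estimate per frame; returns a region only on the
        frame where its dwell time is first reached."""
        region = self.hit_test(x, y)
        if region is not None and region is self._current:
            self._count += 1
        else:
            # Pointer moved to a different figure or off all figures: restart.
            self._current = region
            self._count = 0 if region is None else 1
        if region is not None and self._count == self.required_frames:
            return region
        return None
```

For example, with `required_frames=3` the selector returns a figure's `Region` on the third consecutive frame pointing at it, after which its `audio_clip` could be played; pointing between figures resets the count.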

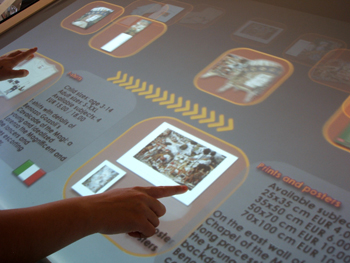

More recently, we have focused our research on tabletop multi-user frameworks that exploit table-like horizontal screens. This decision was guided by the concept of 'affordance'. According to D. Norman, affordance is the ability of an object to suggest the correct way in which it should be used. A table is a natural place to share with other people and can also be used to hold other objects.

Multi-user systems often involve interfaces that are rich in interactive actions and multimedia content. Our early solution used richer interaction languages, developing CV algorithms able to recognize different gestures and hand postures. However, we observed that this approach increased the cognitive load on users, who were forced to learn different gestures before using the system. This went against the concept of a self-explanatory system.

During the last year we have worked on different solutions for developing new natural interaction frameworks that exploit a minimal set of natural object-related operations (e.g. zoom-in for 2D images, zoom and rotate for 3D objects, open and turn page for books, unroll for foulards). An interesting result is our Interactive Bookshop, which was recently prototyped and will soon be installed in the bookshop of Palazzo Medici Riccardi in Florence to display artistic objects as digital replicas.
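
The operations each kind of object supports are listed above, but not how hand movements are translated into them. A minimal sketch, assuming two tracked hand positions per frame: a per-object table of allowed operations (following the examples in the text) filters a two-hand gesture, so that the same movement only zooms a 2D image but zooms and rotates a 3D object. The `Op` names, the `ALLOWED_OPS` keys and the two-hand mapping are illustrative assumptions, not the design of the Interactive Bookshop.

```python
import math
from enum import Enum
from typing import Dict, Set, Tuple

Point = Tuple[float, float]


class Op(Enum):
    ZOOM = "zoom"
    ROTATE = "rotate"
    OPEN = "open"
    TURN_PAGE = "turn_page"
    UNROLL = "unroll"


# Hypothetical table: each kind of displayed object accepts only the few
# operations that are natural for its physical counterpart.
ALLOWED_OPS: Dict[str, Set[Op]] = {
    "image_2d": {Op.ZOOM},
    "object_3d": {Op.ZOOM, Op.ROTATE},
    "book": {Op.OPEN, Op.TURN_PAGE},
    "foulard": {Op.UNROLL},
}


def two_hand_transform(p0: Point, p1: Point, q0: Point, q1: Point) -> Tuple[float, float]:
    """Scale factor and rotation (radians) implied by two tracked hands
    moving from positions (p0, p1) to (q0, q1)."""
    vx0, vy0 = p1[0] - p0[0], p1[1] - p0[1]
    vx1, vy1 = q1[0] - q0[0], q1[1] - q0[1]
    d0 = math.hypot(vx0, vy0)
    if d0 == 0.0:
        return 1.0, 0.0  # hands coincide at the start: no usable reference
    scale = math.hypot(vx1, vy1) / d0
    angle = math.atan2(vy1, vx1) - math.atan2(vy0, vx0)
    angle = math.atan2(math.sin(angle), math.cos(angle))  # wrap to [-pi, pi]
    return scale, angle


def apply_two_hand_gesture(kind: str, p0: Point, p1: Point,
                           q0: Point, q1: Point) -> Tuple[float, float]:
    """Keep only the components of the gesture that the object supports;
    unsupported components fall back to identity (scale 1, angle 0)."""
    scale, angle = two_hand_transform(p0, p1, q0, q1)
    allowed = ALLOWED_OPS.get(kind, set())
    return (scale if Op.ZOOM in allowed else 1.0,
            angle if Op.ROTATE in allowed else 0.0)
```

Operations such as turning a page or unrolling a foulard would need their own gesture detectors, which are not shown here.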

Another solution exploits Tangible User Interfaces (TUIs) to enrich interaction in the case of hinged interfaces. In our implementation of TUIs, users employ 'smart' physical objects in order to interact with tabletop interfaces. Naturally, such objects must be as intuitive to use as possible. The TANGerINE project, developed in collaboration with the Micrel Lab of the University of Bologna, exploits a 'smart cube': a wireless Bluetooth wooden object equipped with a sensor node (tri-axial accelerometer), a vibration motor, an infrared LED matrix on every face and a microcontroller. We chose a cube, once again, because of its clear affordance: users intuitively consider the uppermost face 'active' (as when reading the face of a die), and thus perceive the object as able to embody six different actions or roles.
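
Detecting the 'active' face can be illustrated with the cube's tri-axial accelerometer: when the cube rests on the table, gravity dominates the axis perpendicular to the uppermost face. The sketch below assumes the sensor reports about +1 g along whichever body axis points up at rest (sign conventions differ between sensors) and uses a hypothetical face numbering in which opposite faces sum to seven, as on a die. It is not TANGerINE's actual firmware or protocol.

```python
import math
from typing import Dict, Optional, Tuple

# Hypothetical face numbering (opposite faces sum to seven, as on a die).
# Key: (axis, sign) of the dominant gravity reading in the cube's own frame.
FACE_FOR_AXIS: Dict[Tuple[str, int], int] = {
    ("x", 1): 1, ("x", -1): 6,
    ("y", 1): 2, ("y", -1): 5,
    ("z", 1): 3, ("z", -1): 4,
}


def upper_face(ax: float, ay: float, az: float,
               min_ratio: float = 0.9) -> Optional[int]:
    """Return the uppermost face (1-6) from one accelerometer sample,
    or None when no axis clearly dominates (e.g. the cube is tilted
    onto an edge). `min_ratio` is an assumed threshold."""
    norm = math.sqrt(ax * ax + ay * ay + az * az)
    if norm == 0.0:
        return None
    # Axis carrying the largest share of the measured acceleration.
    axis, value = max((("x", ax), ("y", ay), ("z", az)),
                      key=lambda item: abs(item[1]))
    if abs(value) / norm < min_ratio:
        return None
    return FACE_FOR_AXIS[(axis, 1 if value > 0 else -1)]
```

The returned face number could then index one of six actions or roles; rejecting readings where no axis dominates avoids switching roles while the cube is being turned.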